Posted by Sandeep Sharma

February 26, 2025

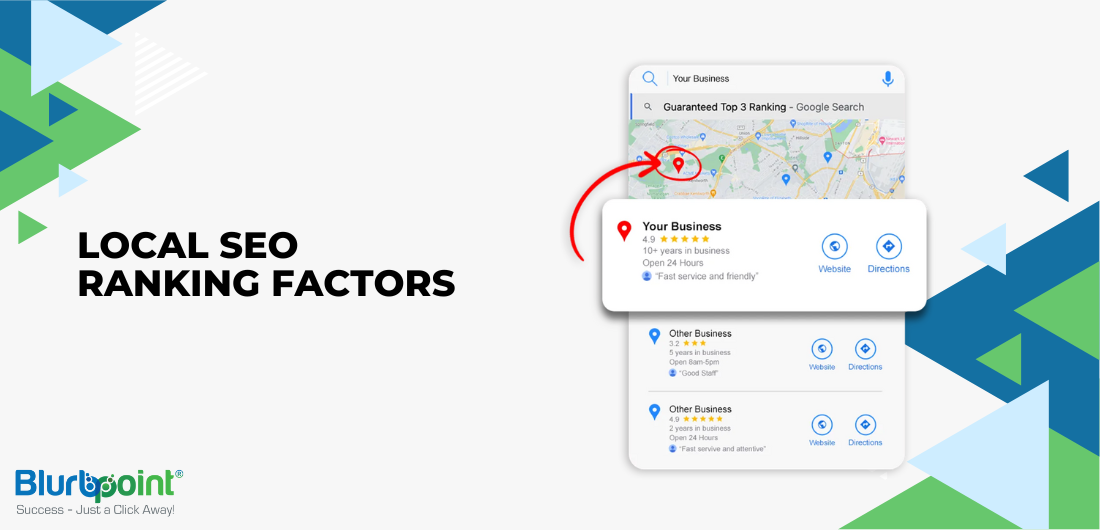

For local businesses, navigating the digital world can feel overwhelming, especially when competing with bigger brands. However, there’s a secret...

Posted by Sandeep Sharma

February 11, 2025

Tired of getting lost in the online crowd? This guide will show you how to master local SEO and attract...

Posted by Sandeep Sharma

January 22, 2025

Local SEO remains a crucial factor for businesses aiming to dominate their local markets in 2025. Yet, despite its importance,...

Posted by Sandeep Sharma

January 10, 2025

The local search landscape is constantly evolving. Don’t get left behind! This 2025 Local SEO Checklist will equip you with...

Posted by Sandeep Sharma

December 08, 2024

As we enter the final quarter of 2024, the question “What is AEO?” has become increasingly relevant for businesses striving...

Posted by Sandeep Sharma

November 24, 2024

Understanding and improving your SEO score is fundamental to achieving higher visibility in search engine results. But what exactly is...

Posted by Sandeep Sharma

November 06, 2024

In 2025, increasing organic traffic is no longer optional. It’s essential. As digital marketing evolves, so do strategies for attracting,...

Posted by Sandeep Sharma

October 27, 2024

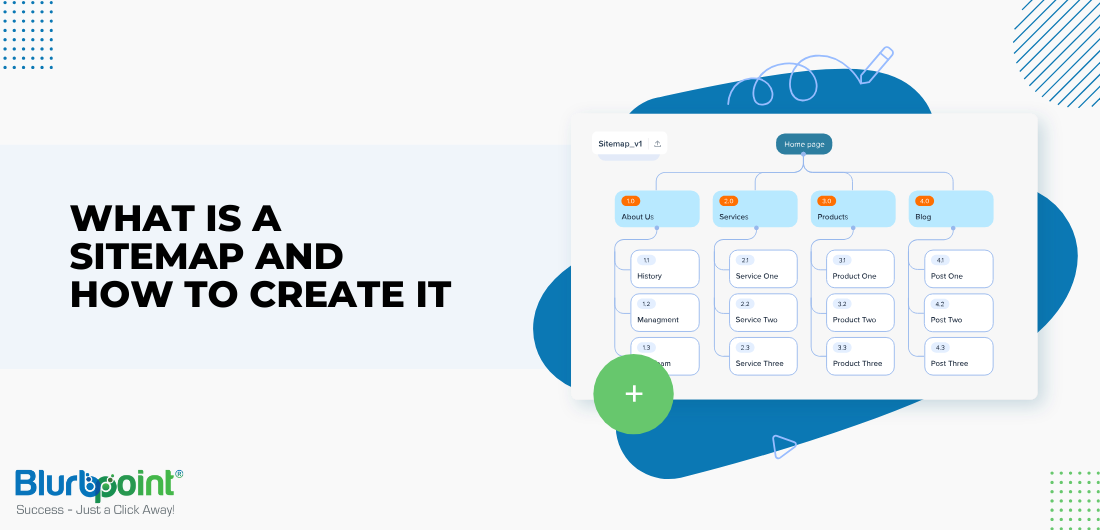

A well-structured sitemap is a fundamental tool for optimizing website performance and search engine visibility. Yet, many website owners are...